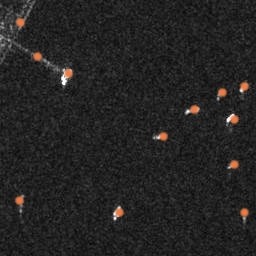

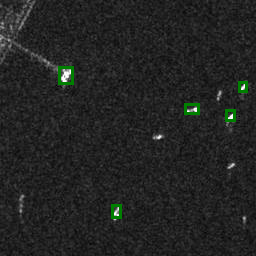

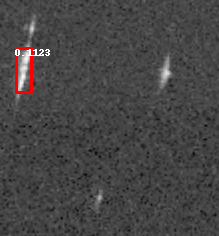

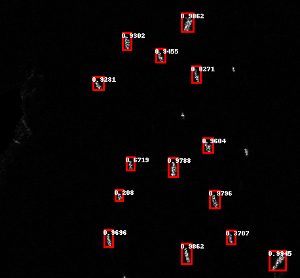

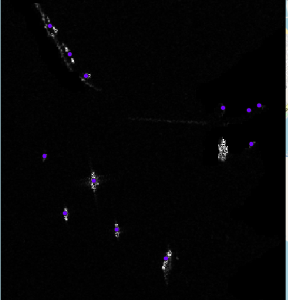

Sample images from the SAR dataset

Example image chips are included to better visualize the differences between polarizations and other SAR imagery properties.

SAR imagery offers several advantages over traditional overhead imagery. A SAR instrument emits its own signal and generates its data from the returning signal. Traditional overhead imagery depends on light reflected off the earth and atmosphere, so it cannot be captured at night and is often obscured by clouds. Because a SAR instrument provides its own source signal, it can collect data at night, and because that signal passes through clouds, the resulting imagery is not obstructed by them.

One potential use case for SAR imagery is ship detection in open water. Ships engaged in illegal fishing often turn off their Automatic Identification System (AIS) so they can fish undetected in areas where fishing is illegal. Ship detection using SAR imagery can identify these ships.

SAR imagery is collected in four possible polarizations: VV, VH, HV, and HH. We read each code as "outgoing signal" followed by "incoming signal". For example, VV is a vertical outgoing signal with a vertical incoming signal, and HV is a horizontal outgoing signal with a vertical incoming signal. There is not much research on which polarizations perform best in deep learning models, so our analysis tests individual polarizations as well as combinations of polarizations. Combining different polarizations beforehand seemed to lead to worse model performance and was abandoned early in the research. For our results, we focus on the customized models trained for each type of polarization, as well as a combined model capable of accepting any of the four polarizations as input, and we compare the outputs of these models.
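The naming convention above can be sketched in a few lines of Python. The helper name `describe_polarization` and the dictionary layout are hypothetical, introduced only for illustration; only the four codes and their outgoing/incoming reading come from the text.

```python
# Hypothetical helper: only the four codes and their meaning come from the text.
DIRECTIONS = {"V": "vertical", "H": "horizontal"}
POLARIZATIONS = ["VV", "VH", "HV", "HH"]


def describe_polarization(code: str) -> dict:
    """Split a two-letter code into its outgoing and incoming signal directions."""
    code = code.strip().upper()
    if len(code) != 2 or any(ch not in DIRECTIONS for ch in code):
        raise ValueError(f"unknown polarization code: {code!r}")
    outgoing, incoming = code  # first letter is outgoing, second is incoming
    return {
        "code": code,
        "outgoing": DIRECTIONS[outgoing],
        "incoming": DIRECTIONS[incoming],
    }


if __name__ == "__main__":
    for code in POLARIZATIONS:
        info = describe_polarization(code)
        print(f"{code}: {info['outgoing']} outgoing, {info['incoming']} incoming")
```

A lookup like this is mainly useful for routing an image chip to the model, or model branch, that matches its polarization.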

This project expands on a previous GA-CCRi project that used SAR data to detect ships. The previous implementation relied on CFAR (constant false alarm rate) for detection. We apply deep learning and computer vision methods to the same problem in hopes of improving on those results. Additionally, we explore CycleGAN applications to create more labeled data and to visualize and understand SAR imagery features.
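For readers unfamiliar with the baseline, the sketch below shows one common CFAR (constant false alarm rate) variant, cell-averaging CFAR, in plain Python. It is not the earlier GA-CCRi implementation, whose details are not given here. The function name `ca_cfar_2d` and the `guard`, `train`, and `scale` values are assumptions chosen for illustration.

```python
def ca_cfar_2d(image, guard=1, train=2, scale=3.0):
    """Cell-averaging CFAR over a 2D list of intensities.

    A pixel is flagged when it exceeds `scale` times the mean of the
    training cells around it. Guard cells next to the pixel are excluded
    so a bright target does not raise its own threshold.
    Pixels closer than guard + train to the border are skipped.
    """
    rows, cols = len(image), len(image[0])
    half = guard + train
    detections = []
    for r in range(half, rows - half):
        for c in range(half, cols - half):
            total, count = 0.0, 0
            for dr in range(-half, half + 1):
                for dc in range(-half, half + 1):
                    if max(abs(dr), abs(dc)) <= guard:
                        continue  # cell under test and its guard cells
                    total += image[r + dr][c + dc]
                    count += 1
            if image[r][c] > scale * (total / count):
                detections.append((r, c))
    return detections


# Toy example: flat sea clutter with one bright pixel standing in for a ship.
image = [[1.0] * 9 for _ in range(9)]
image[4][4] = 50.0
print(ca_cfar_2d(image))  # [(4, 4)]
```

Because the threshold adapts to the local background only, a detector of this kind has no notion of ship shape, which is one reason a learned detector is worth comparing against it.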

Click on an image below to compare results on the different test sets and to view the CycleGAN imagery.

SAR is an up-and-coming mode of imagery, and understanding it is critical to future applications of computer vision. Here we explored how deep learning can be used to detect ships in SAR imagery. By implementing a novel modification to the well-known Faster R-CNN neural network, we were able to detect ships in SAR data more accurately. In addition to providing researchers with more labeled data, exploring CycleGANs helps us better understand which ship features result in brighter pixels within SAR imagery.

The results clearly indicate that the combined model, which accepts all four polarizations and is trained on data from all four, had the best accuracy metrics.

This work improved GA-CCRi's SAR ship detection tools and added a novel CycleGAN tool that can provide more labeled data and more interpretability for the released deep learning architecture.

Training and validation data come from the SAR-Ship-Dataset, which contains 38,760 labeled polarized images and 59,535 total ship instances from Sentinel-1 and Gaofen-3 satellites. The dataset contains images with 3-meter, 5-meter, and 6-meter ground sample distance (GSD). Most test data is downloaded directly from Copernicus and includes Sentinel-1 images preprocessed using some of Erin Ryan's work while at GA-CCRi (Imaging and AIS). The CycleGAN is trained on the same SAR dataset and on an optical imagery dataset.


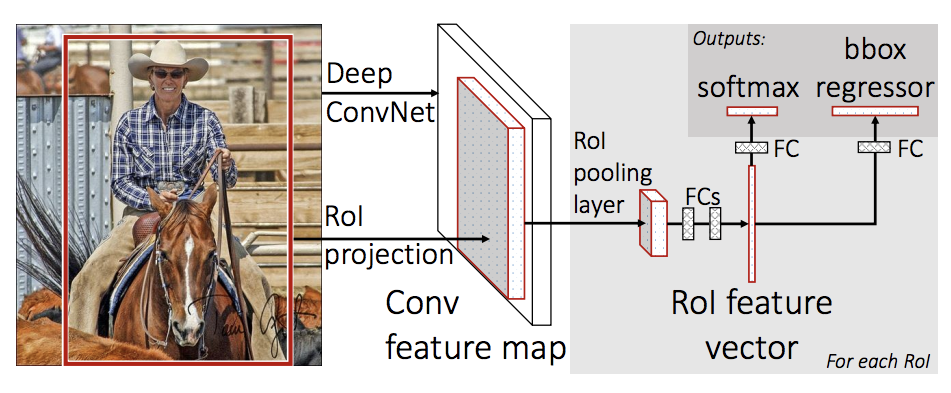

The model is a Faster R-CNN with a ResNet-50 backbone. The winning model has four different initial convolutional layers, and the one applied depends on the polarization of the input image. The rest of the architecture is shared. The model predicts both bounding boxes and class scores and is implemented in PyTorch.
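The idea of polarization-specific initial layers feeding a shared network can be sketched in PyTorch as follows. This is a minimal illustration, not the released model. The class name `MultiPolarizationBackbone`, the single-channel input, and the tiny stand-in for the shared body are all assumptions; in the real model the shared part would be the remainder of the ResNet-50 and the Faster R-CNN heads.

```python
import torch
from torch import nn

POLARIZATIONS = ("VV", "VH", "HV", "HH")


class MultiPolarizationBackbone(nn.Module):
    """One initial conv layer per polarization, followed by a shared body."""

    def __init__(self, shared_body, in_channels=1, stem_channels=64):
        super().__init__()
        # Same geometry as the first ResNet conv (7x7, stride 2, padding 3).
        # in_channels=1 is an assumption about the SAR chips.
        self.stems = nn.ModuleDict({
            pol: nn.Conv2d(in_channels, stem_channels, kernel_size=7,
                           stride=2, padding=3, bias=False)
            for pol in POLARIZATIONS
        })
        self.shared_body = shared_body

    def forward(self, image, polarization):
        if polarization not in self.stems:
            raise ValueError(f"unknown polarization: {polarization!r}")
        # Only the stem depends on the polarization; everything after is shared.
        return self.shared_body(self.stems[polarization](image))


if __name__ == "__main__":
    # Tiny placeholder for the shared part of the network.
    body = nn.Sequential(nn.BatchNorm2d(64), nn.ReLU(), nn.AdaptiveAvgPool2d(1))
    model = MultiPolarizationBackbone(body)
    chips = torch.randn(2, 1, 256, 256)
    print(model(chips, "VH").shape)  # torch.Size([2, 64, 1, 1])
```

With this layout each polarization learns its own low-level filters, while every training image, regardless of polarization, updates the shared weights.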

The object detection model has been deployed via Flask and Heroku. A set of test images is provided; selecting one runs the model in real time and returns its predictions. Press any of the buttons below to test the functionality. If you have your own SAR ship data, you can also choose an image and the model will run on it.

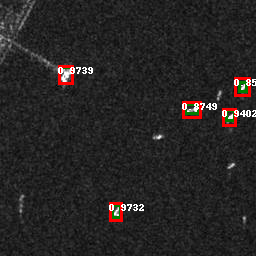

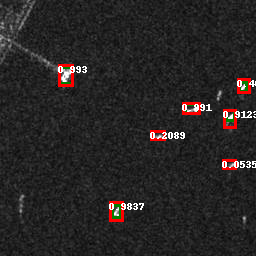

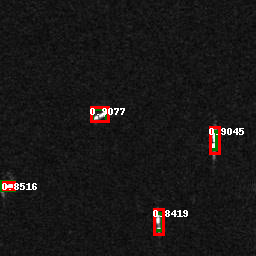

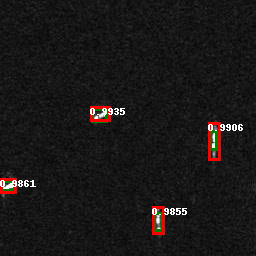

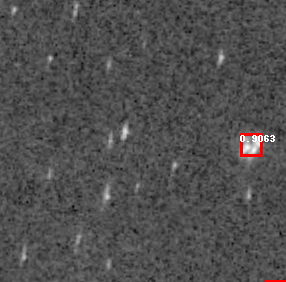

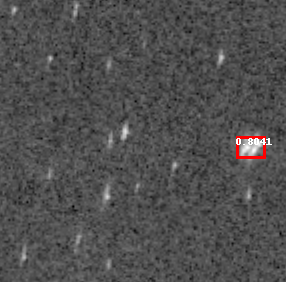

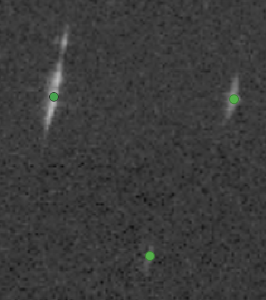

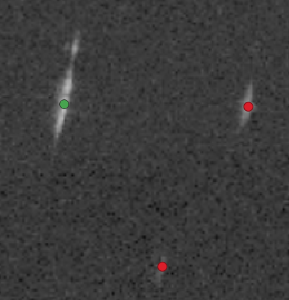

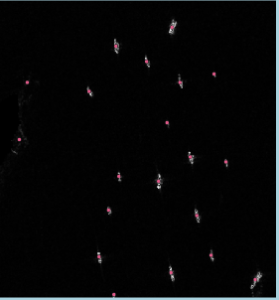

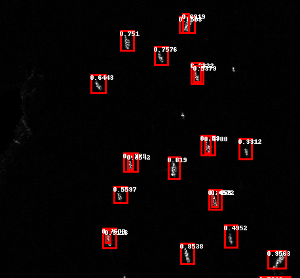

The following test dataset was part of the originally retrieved SAR-Ship-Dataset. This set has ground truth values, which can be seen in the rightmost image. Only true boats are shown in green. The outputs from the different models are also shown. The CFAR model is the baseline already implemented at GA-CCRi. The combined model can accept any of the four polarizations as input and is trained on all the data. The VV model is trained on only VV imagery. These test images are of the VV polarization. The combined model is clearly the most accurate, as the VV model has two additional false positives. CFAR is by far the worst.
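When ground truth boxes are available, false positives like those mentioned above can be counted by matching predicted boxes to ground truth boxes. The sketch below uses greedy matching on intersection over union (IoU). The 0.5 threshold, the `(x1, y1, x2, y2)` box format, and the function names are assumptions, since the matching rule used for these results is not stated.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def count_detections(predictions, ground_truth, threshold=0.5):
    """Return (true_positives, false_positives, false_negatives).

    Predictions are assumed to be sorted by confidence, highest first.
    Each ground truth box can be matched by at most one prediction.
    """
    unmatched = list(ground_truth)
    true_pos = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)
            true_pos += 1
    false_pos = len(predictions) - true_pos
    return true_pos, false_pos, len(unmatched)


# Toy example: two ships, four predictions, two of which hit nothing.
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (21, 20, 30, 30), (50, 50, 60, 60), (70, 70, 80, 80)]
print(count_detections(preds, truth))  # (2, 2, 0)
```

Running the same count for each model on the same test set gives a like-for-like comparison of false positives and missed ships.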

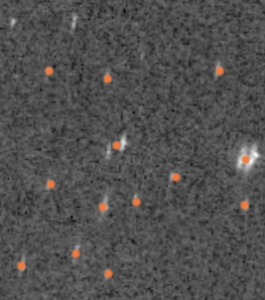

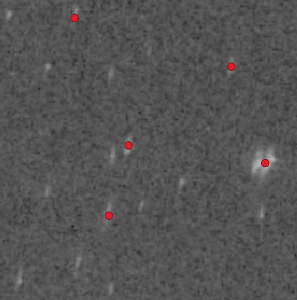

The following test dataset was taken from the new XView competition, which mainly featured SAR imagery with 10-meter GSD. This set has ground truth values, which can be seen in the rightmost image. Non-ships are either in red or not highlighted, and ships are in green. The outputs from the different models are also shown. The CFAR model is the baseline already implemented at GA-CCRi. The combined model can accept any of the four polarizations as input and is trained on all the data. The VV model is trained on only VV imagery. These test images are of the VV polarization. The combined model is again clearly the most accurate. CFAR is by far the worst.

The following test dataset was taken directly from live Sentinel-1 data via Copernicus Hub. This set does not have ground truth values. Outputs from each model are shown. The CFAR model is the baseline already implemented at GA-CCRi. The combined model can accept any of the four polarizations as input and is trained on all the data. The VV model is trained on only VV imagery. These test images are of the VV polarization. Without verification against AIS data, it is not clear which model performs best here.

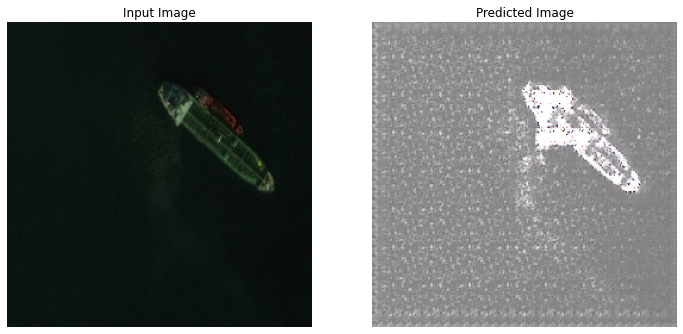

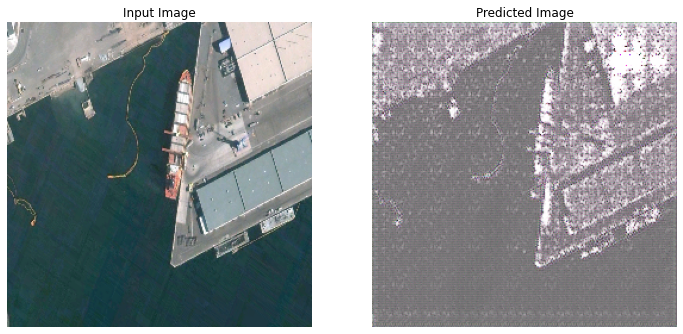

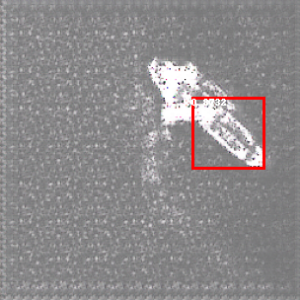

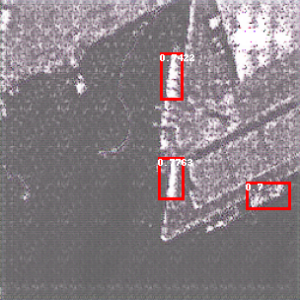

Below you will find the results of a CycleGAN trained to predict what an optical image would look like if it were SAR. Exploring this method allows us to understand how certain features in an optical image change in radar imagery. These predicted SAR images were run through the combined model, and the results are added at the end to show that this method is worth further exploration in the future.